Fixing the Internet for Real Time Applications: Part I

League of Legends is not a game of seconds, but of milliseconds. In day-to-day life, two seconds fly by unnoticed—you took two seconds reading that! In-game, however, a two-second stun can feel like an absolute eternity. In any single match of LoL, thousands of decisions made in milliseconds dictate which team scores bragging rights and which settles for “honorable opponent” points.

I’m a network engineer at Riot and part of the Riot Direct team, which obsesses over those milliseconds. If the communication between a player’s computer and our servers slows, that player can’t make in-game decisions as well, and it’s rough to let your team down because of a connection issue. My team’s mission is to provide players with the fastest possible connection to our game.

This is the first in a three-part series discussing what problems online games face using the commodity internet as their delivery mechanism to players, what Riot’s doing about it, and what the future holds for real-time applications.

In this first post, I’ll explain how the internet, while an absolutely amazing piece of technology, wasn’t built for applications that run in real time. If your web browser issues a GET request to rottentomatoes.com looking for reviews of the latest Bond film, you probably don’t mind waiting a few seconds as long as all the relevant information then appears. No user of rottentomatoes.com absolutely needs a movie review within the next tenth of a second. Yet when I play League, I’m constantly taking in information I need within exactly that kind of timeframe.

I’ll also discuss some components of the internet’s construction and their implications. Then I’ll dig deeper into the issue illustrated by the rottentomatoes.com example: how online game communications fundamentally differ from those of other applications. Finally, I’ll explain how the internet’s routing hardware makes real-time communication even harder.

A Series of Tubes

The internet is a remarkable tool. It delivers incredible amounts of data that power so much of modern life. It has given rise to new business ecosystems, new ways to communicate, and new ways to game. In some respects, however, it’s beginning to reach its limits: the user experience of applications that require near real-time response (e.g. online games) suffers because of its design.

The internet is not a single unified system, but rather a conglomeration of multiple entities. When you play League, data transfers from Riot’s servers to backbone companies (like Level3, Zayo, and Cogent) to ISP companies (like Comcast, AT&T, Time Warner Cable, and Verizon) and then finally to you, and vice versa.

Backbone companies interconnect with ISPs over fiber-optic cable routes, many of them built alongside train tracks that zigzag across the country, and they use BGP (Border Gateway Protocol) to route traffic between their networks. Unfortunately, BGP routing creates situations where traffic that should move directly between two locations instead takes an extremely circuitous route. The culprit: simple economics. Backbone providers and ISPs route traffic along the lowest-cost path, not the lowest-latency path. In other words, these companies care that your packets get from point A to point Z, but they prioritize a cheaper route over a faster one.
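To make the cost-versus-latency trade-off concrete, here is a toy sketch of two selection rules applied to the same candidate paths. It is not how BGP actually computes routes. Real BGP best-path selection weighs policy attributes such as local preference and AS-path length, not measured latency. The per-path `cost` values are hypothetical, and the latencies come from the San Francisco to Portland example in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    hops: tuple[str, ...]
    cost: float        # hypothetical transit cost (arbitrary units)
    latency_ms: float  # end-to-end latency of the path


def cheapest(paths: list[Path]) -> Path:
    # Economics-driven choice: minimize what the carrier pays, ignore latency.
    return min(paths, key=lambda p: p.cost)


def fastest(paths: list[Path]) -> Path:
    # What a real-time application would prefer: minimize latency.
    return min(paths, key=lambda p: p.latency_ms)


candidates = [
    Path(("San Francisco", "Portland"), cost=10.0, latency_ms=14.0),
    Path(("San Francisco", "Los Angeles", "Denver", "Seattle", "Portland"),
         cost=4.0, latency_ms=70.0),
]

print(" -> ".join(cheapest(candidates).hops), cheapest(candidates).latency_ms)
print(" -> ".join(fastest(candidates).hops), fastest(candidates).latency_ms)
```

With these made-up costs, the cheapest rule picks the five-city route at 70ms, while a latency-first rule picks the direct 14ms path.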

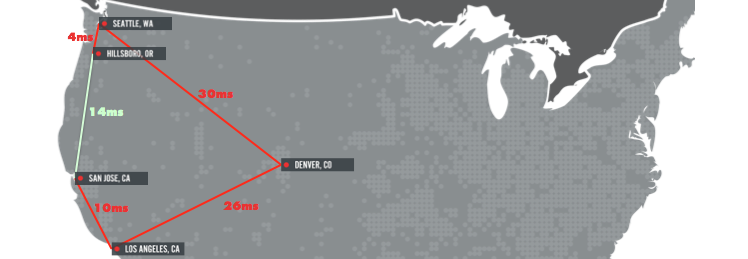

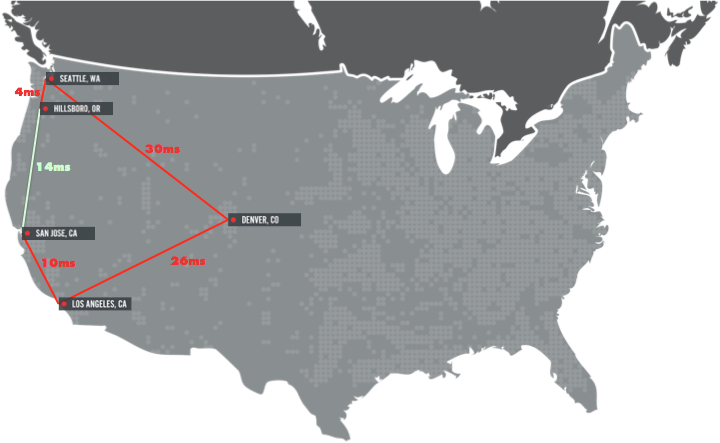

As an illustration, consider a real traffic flow submitted by a LoL player (pictured above) that should travel directly between San Francisco and Portland. Instead, it bounces from San Francisco to Los Angeles, then Denver, then Seattle, and only then to its final destination of Portland. A direct trip might have taken 14ms, but the circuitous route takes a full 70ms. That’s five times as long, a brutal 400% increase. To the ISPs and their backbone providers, this is acceptable, but it isn’t acceptable for games like LoL.

For real-time applications like LoL, this circuitous routing creates large disparities in user experience, especially since different ISPs and backbone providers use different routes. A player in one house might enjoy an online game with extremely low latency while their friend next door, on a different ISP, battles significant lag.

If Only We Could Buffer

The current internet architecture is great for streaming video experiences like Netflix, where the buffering of content mitigates the pain of slower response times. If the buffer gathers five seconds of video, subsequent data can take up to five seconds to arrive. In fact, for applications like Netflix, buffering means user traffic could traverse the earth 100 times and the user experience would be just as good as if it’d taken the shortest path possible. In a game of LoL, however, where the future can’t be buffered since it hasn’t yet been played, those five seconds are utterly unacceptable.
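A minimal sketch of why buffering hides latency: with a playback buffer, a piece of data only has to arrive before its scheduled play time, so any delay shorter than the buffer goes unnoticed. The 5,000 ms budget matches the five-second buffer above. The 30 ms budget for a game, which has nothing it can buffer, is a hypothetical figure chosen only for illustration.

```python
def late_arrivals(delays_ms: list[float], budget_ms: float) -> int:
    """Count updates that arrive after they were needed.

    budget_ms is how long the receiver can afford to wait for data:
    the buffer depth for streaming video, or a tight deadline for a game.
    """
    return sum(1 for d in delays_ms if d > budget_ms)


# Trip times (ms) from the routing example: direct vs. circuitous.
delays = [14.0, 70.0, 70.0, 14.0]

video_late = late_arrivals(delays, budget_ms=5_000.0)  # five seconds of buffered video
game_late = late_arrivals(delays, budget_ms=30.0)      # hypothetical game budget

print(video_late, game_late)
```

For video, both routes look identical because nothing arrives late. For the game, every update that took the 70ms route misses its deadline.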

Routers Aren't Helping

Lastly, in addition to the lag and the inability to buffer, network routers—the staple hardware of the internet—exacerbate the problems faced by real-time applications. It has to do with how they interact with packets on the wire.

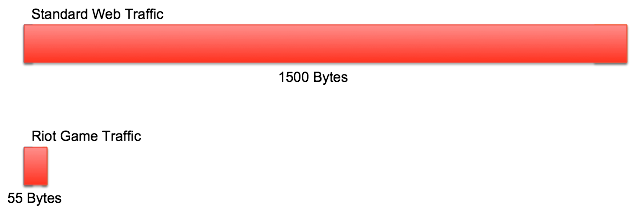

The vast majority of internet traffic is carried in packets of 1500 bytes. Consider these the tractor-trailers of the internet, since almost every picture, movie, or song transmitted online travels in packets of this size. (The 1500-byte limit comes from the Maximum Transmission Unit, or MTU, of Ethernet version 2. Interested readers can look up the Ethernet II frame format for the details of the actual framing.) In stark contrast, game traffic usually travels in packets of around 55 bytes. Games require constant updates, but any individual message is quite small. Netflix sends a significant amount of information about the next high-resolution video frame, while the entirety of a LoL message might be the location of a player’s right-click.
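To give a sense of scale, here is a hypothetical right-click message packed with Python’s standard `struct` module. The field layout is invented for illustration and is not League’s actual wire format: a 1-byte message type, a 4-byte game tick, and two 4-byte floats for map coordinates. The header sizes are the standard minimum IPv4 (20-byte) and UDP (8-byte) headers.

```python
import struct

# Hypothetical layout: "<" = little-endian with no padding;
# B = uint8 message type, I = uint32 tick, f = float32 x, f = float32 y.
CLICK_FORMAT = "<BIff"

IPV4_HEADER = 20  # bytes, minimum IPv4 header (no options)
UDP_HEADER = 8    # bytes


def encode_click(msg_type: int, tick: int, x: float, y: float) -> bytes:
    # Pack the hypothetical click fields into a compact binary payload.
    return struct.pack(CLICK_FORMAT, msg_type, tick, x, y)


payload = encode_click(1, 123_456, 7021.5, 4310.25)
on_wire = len(payload) + IPV4_HEADER + UDP_HEADER

print(len(payload), on_wire)  # 13 41
```

Even with headers included, this made-up message comes in far below a 1500-byte full-size packet.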

The problem stems from the fact that a router incurs a processing overhead for every packet it forwards, regardless of that packet’s size. Routers aren’t counting bytes, just packets. Twice as many packets at half the size may carry the same total amount of information, but the per-packet processing overhead doubles with nothing to offset it. For League, a constant stream of 55-byte packets instead of the standard 1500-byte packets means roughly 27 times as many packets for the same amount of data, and therefore roughly 27 times the cost. That’s huge!
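Here is a back-of-the-envelope sketch of the 27x figure. It assumes, as the paragraph does, that every packet costs a router the same amount of work regardless of size. The 1.5 MB total is an arbitrary example volume.

```python
import math


def packets_needed(total_bytes: int, packet_bytes: int) -> int:
    # Each packet costs the router roughly the same to process,
    # so what matters is how many packets the data is split into.
    return math.ceil(total_bytes / packet_bytes)


data = 1_500_000  # hypothetical: 1.5 MB of traffic to forward

bulk = packets_needed(data, 1500)  # full-size packets
game = packets_needed(data, 55)    # game-sized packets

print(bulk, game, round(game / bulk, 1))  # 1000 27273 27.3
```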

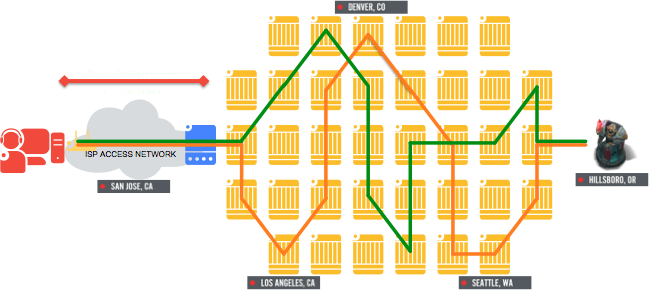

If a router receives more packets than it can handle, it’s forced to simply drop them. The packets wink out of existence, resulting in extremely frustrating play experiences: other champions dart around the screen, projectiles teleport unexpectedly, and damage occurs without explanation. The massive increase in packets sent by our game, compared with the type of traffic the internet was designed for, causes routers to drop packets in two particular ways.

The first issue has to do with router input buffers, where packets wait temporarily so they don’t overwhelm the routing process. This buffer is allocated at a fixed size in bytes (say, 10 megabytes), yet it can hold only a fixed number of packets, no matter how small each packet is. Once it fills, the router has no choice but to drop the next packet that arrives. Since League generates 27x as many packets, it fills the buffer 27x as quickly. Readers interested in a technical deep dive can check out the academic paper "Sizing Router Buffers" from the smart people at Stanford.
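Below is a minimal simulation of that effect. It assumes, as the paragraph does, that buffer capacity is counted in packets rather than bytes. It also assumes a burst arrives faster than the router can drain it. The 1,000-slot capacity and the 1.5 MB burst are hypothetical numbers, and real router buffering is considerably more complex.

```python
from collections import deque


class SlotBuffer:
    """Toy input buffer with a fixed number of packet slots.

    Mirrors the simplification in the text: capacity is counted in
    packets, not bytes, and arrivals to a full buffer are dropped.
    """

    def __init__(self, slots: int) -> None:
        self.slots = slots
        self.queue: deque[int] = deque()
        self.dropped = 0

    def enqueue(self, packet_bytes: int) -> bool:
        if len(self.queue) >= self.slots:
            self.dropped += 1  # buffer full: drop the arriving packet
            return False
        self.queue.append(packet_bytes)
        return True


def burst(slots: int, total_bytes: int, packet_bytes: int) -> int:
    # Deliver a burst faster than the router drains it; return the drop count.
    buf = SlotBuffer(slots)
    for _ in range(-(-total_bytes // packet_bytes)):  # ceiling division
        buf.enqueue(packet_bytes)
    return buf.dropped


print(burst(1_000, 1_500_000, 1500))  # 0
print(burst(1_000, 1_500_000, 55))    # 26273
```

The same 1.5 MB fits exactly when it arrives in 1500-byte packets. When it arrives in 55-byte packets, the slots run out after the first 1,000 packets.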

The second issue faced by online gaming traffic is how many (but not all) routers treat UDP packets compared with TCP packets. Most games send their traffic over UDP because it’s lighter-weight and faster. However, when the route processors of internet routers start to become overwhelmed by traffic, many simply start ignoring UDP packets. These packets are dropped immediately and never recovered.
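The behavior described here can be sketched as a simple load-shedding rule. This policy is hypothetical and deliberately simplified to mirror the description in this post. It is not the algorithm of any particular router vendor, and `capacity` stands in for how many packets the route processor can handle in a given interval.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    protocol: str  # "tcp" or "udp"
    seq: int       # identifier, just to tell packets apart


def shed_load(packets: list[Packet], capacity: int) -> tuple[list[Packet], list[Packet]]:
    """Return (forwarded, dropped) under a hypothetical UDP-first policy."""
    if len(packets) <= capacity:
        return list(packets), []  # not overloaded: forward everything
    # Overloaded: ignore UDP outright. A dropped UDP packet is never resent,
    # so discarding it actually reduces the load on the router.
    forwarded = [p for p in packets if p.protocol != "udp"]
    dropped = [p for p in packets if p.protocol == "udp"]
    # If the remaining TCP traffic still exceeds capacity, drop the excess too.
    dropped += forwarded[capacity:]
    return forwarded[:capacity], dropped


mixed = [Packet("tcp", 0), Packet("udp", 1), Packet("tcp", 2), Packet("udp", 3)]
fwd, drop = shed_load(mixed, capacity=3)
print([p.seq for p in fwd], [p.seq for p in drop])  # [0, 2] [1, 3]
```

Note that the router is only one packet over capacity, yet both UDP packets are discarded. That is the "simply start ignoring UDP" behavior the paragraph describes.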

This behavior is a consequence of the reliability that TCP guarantees and UDP lacks. If a TCP packet fails to arrive, the recipient sends a new packet back to the original sender to request the lost information. All of this ensures that a TCP sender’s message will eventually be received in full. Internet routers are aware of this TCP behavior and know that, when they’re overwhelmed, dropping a TCP packet will only result in the creation of a new packet. In short, the drop makes zero progress toward lessening the strain on the system. A dropped UDP packet, on the other hand, simply disappears and lightens the overall load. Note that this also affects reliable UDP (a construct, often used by online games, that adds ordered delivery on top of UDP), because internet routers can’t distinguish reliable UDP from ordinary UDP.
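As a rough illustration of what a reliable UDP layer adds, here is a hypothetical receiver. It buffers out-of-order datagrams, releases them in sequence order, and reports the gaps it would ask the sender to retransmit. Real reliable-UDP protocols differ in their details, and this class and its method names are invented for the example. The sequence number lives inside the UDP payload, which is why, to a router, these datagrams look like any other UDP traffic.

```python
from dataclasses import dataclass, field


@dataclass
class OrderedReceiver:
    """Hypothetical reliable-UDP receiver: buffers out-of-order datagrams
    and releases payloads strictly in sequence-number order."""

    next_seq: int = 0
    pending: dict[int, bytes] = field(default_factory=dict)

    def on_datagram(self, seq: int, payload: bytes) -> list[bytes]:
        # Ignore duplicates of data that was already delivered.
        if seq < self.next_seq:
            return []
        self.pending[seq] = payload
        delivered = []
        # Release every consecutive payload starting at next_seq.
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered

    def missing(self) -> list[int]:
        # Sequence numbers to request again: gaps below the highest one seen.
        if not self.pending:
            return []
        highest = max(self.pending)
        return [s for s in range(self.next_seq, highest) if s not in self.pending]


r = OrderedReceiver()
print(r.on_datagram(0, b"a"))  # [b'a']
print(r.on_datagram(2, b"c"))  # [] (held until seq 1 arrives)
print(r.missing())             # [1]
print(r.on_datagram(1, b"b"))  # [b'b', b'c']
```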

With all this in mind, consider how the circuitous routing that BGP produces puts many more routers on a single trip. If a direct (and oversimplified) route has a single router at each end, a packet hits two routers. If BGP bounces that traffic through 4 intermediate hops, a packet hits six routers, tripling the overall load on the system. We’re now well on our way to overwhelming routers.
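Combining the two simplified effects from this post gives a rough sense of how they compound. The sketch below counts per-packet router operations as packets multiplied by routers on the path. The packet counts correspond to a hypothetical 1.5 MB of data sent as 1500-byte versus 55-byte packets. The model ignores everything else a real network does.

```python
def router_operations(packets: int, intermediate_hops: int) -> int:
    # Simplified model from the text: one router at each end of the path,
    # plus one per intermediate hop, and each router handles every packet.
    routers = 2 + intermediate_hops
    return packets * routers


direct_bulk = router_operations(packets=1_000, intermediate_hops=0)
circuitous_game = router_operations(packets=27_273, intermediate_hops=4)

print(direct_bulk, circuitous_game, round(circuitous_game / direct_bulk))  # 2000 163638 82
```

Under these assumptions, small packets on a circuitous route generate roughly 80 times the router work of the same data sent in full-size packets over a direct route.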

Wrapping Up

All of this together adds up to an internet that's far from ideal for League of Legends: the resulting latency and packet loss make for frustrating experiences in our game of milliseconds. Real-time gaming traffic requires a new approach—one that eliminates the inefficiencies of indirect fiber routes, BGP, and router configurations. In my next post, I’ll discuss the technical details of the approach that the Riot Direct team is using to tackle this problem and share what’s working and what we want to improve.

For more information, check out the rest of this series:

Part I: The Problem (this article)

Part II: Riot's Solution

Part III: What's Next?