Deep Dive into the Clash Crash

Hello! My name is Tomasz Mozolewski, and I’m a senior software engineer on our Competitive team. My team is responsible for projects like Champion Mastery and Ranked. I’m here to talk about an event that has sparked a lot of discussion about League tech, and which happens to be one of the most requested Tech Blog topics of all time - Clash.

I’ve been working on Clash for the 3 years it’s been in development, and I’ve witnessed it all from behind the scenes. The excitement for launch, the panic during crashes, and the final build toward confidence - we were feeling it all as intensely as our players were.

In this article, I’ll give an overview of the technical overload problems that forced us to cancel Clash. I hope I’ll be able to answer some of the long-standing questions from players and fellow technologists alike - what exactly happened? And why?

I’ll start with an explanation of Clash for those who aren’t familiar with the game mode, then take you through a timeline of events and their causes, and finally describe the solution that successfully got Clash out to players.

What is Clash?

Clash is League’s take on an accessible tournament mode, making it possible for teams of players to compete in a bracket for a coveted prize. While it’s meant to feel similar to professional esports like the LCS, Clash is a condensed experience with a simpler setup aimed at all ranked players, regardless of skill level. Instead of taking months to get through a bracket, the entire Clash tournament takes place over one weekend with a limited number of games. To make the experience feel as much like a pro game as possible, we kept many of the same systems - brackets, scouting, drafting, and (of course) shiny rewards.

There’s something special about competitive play - practicing with a premade group of 5, scouting enemies, choosing picks and bans, feeling the pressure before big games, and having it all pay off with a trophy. Clash was a priority for our team from the beginning because we knew players would love experiencing the excitement of a tournament from the comfort of their homes.

It took several iterations to get Clash in the stable, playable state it’s in now. Let’s take a look at how it all went down.

The Big Crash

By now, most people in the League community are familiar with the unfortunate crashes that soured the first large-scale Clash weekends. So what caused these? Now that we’re confident in the current state of Clash, I’m looking forward to giving some insight into just how complex a tournament mode system can be.

The first thing to note is that we wanted to launch the initial iteration of Clash as fast as possible - our original goal was to ship within 6 months, which meant taking shortcuts. Prioritizing workloads is pretty standard for any kind of engineering, but the shortcuts we had to take generated a lot of tech debt. Because we were working in an old monolith system, much of the work required to keep Clash running was manual. Our initial plan was to gather feedback from players as early as possible, because we figured that once we confirmed we were going in the right direction, we could sink more development time and resources into perfecting and polishing it.

Timeline of Events

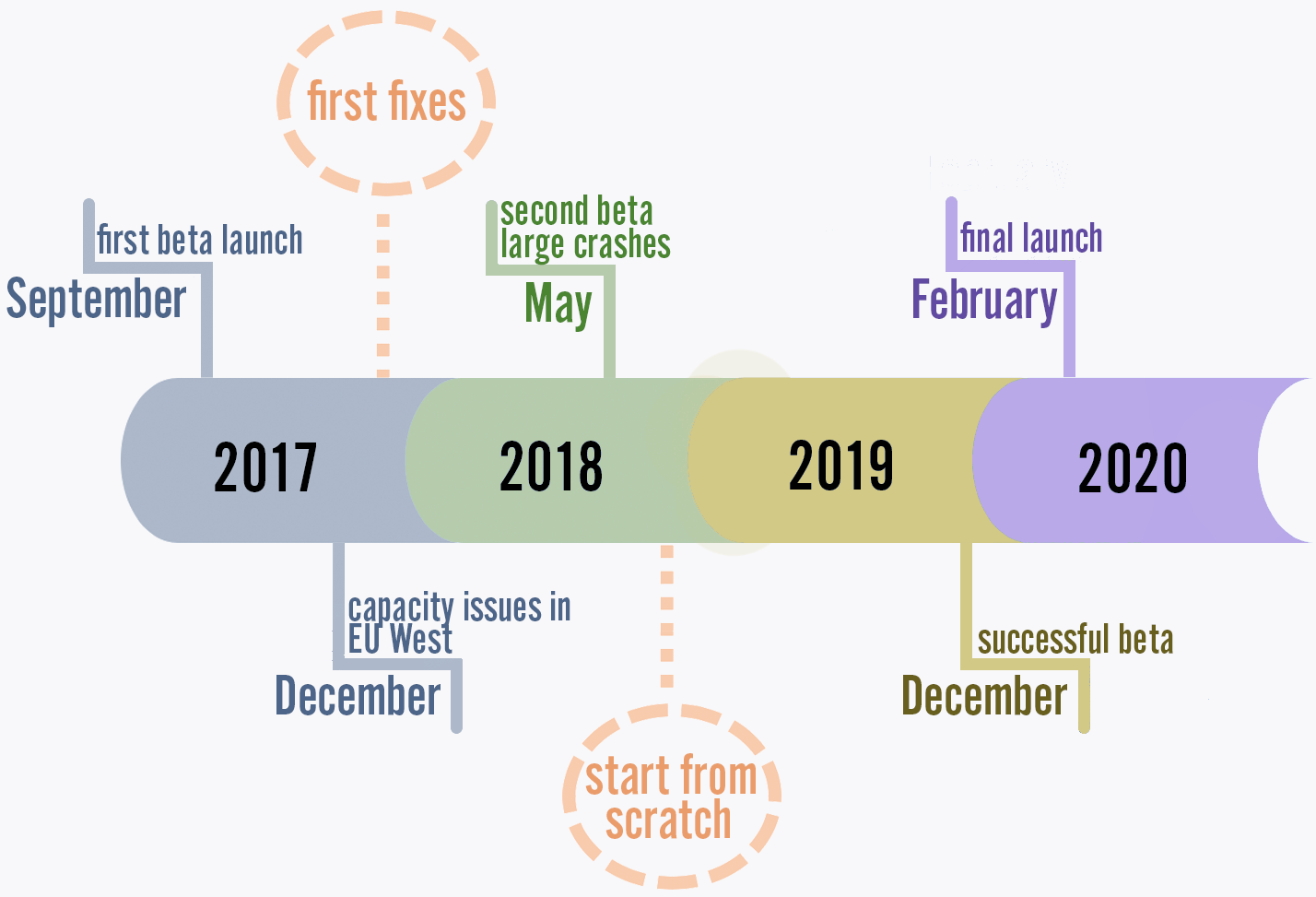

Our first beta in September 2017 in Vietnam went really well. We were thrilled to see how much players loved playing Clash, and we identified some small fixes and adjustments to improve the experience. After these improvements, we decided to launch in Europe in December 2017. This launch was less successful. EU West pretty much instantly ran into capacity problems, which was our first indication of scalability issues.

We decided to do controlled testing on smaller regions, and apply what we learned from them to our larger regions. This led us to our first set of overload fixes.

First Fixes

We made small tweaks to tangential systems, but the underlying overload issue remained a consistent problem that kept us awake at night. Some of us still can’t sleep when a tournament starts, and find ourselves checking to make sure everything is fine.

To solve the overload, we started with a trivial solution - limiting the game starts to a sustainable rate.

We used load testing to determine a safe game start rate for each shard’s Champ Select service, extended scouting periods until a match could start, and were confident enough to run another beta in May 2018. This beta showed that the rate limit worked great for game launches, but having all of those games running at once caused everything from high latency to games shutting down mid-match.
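The first fix, a fixed start rate per shard with extended scouting for matches that have to wait, can be sketched as a simple queue. This is a hypothetical illustration rather than Riot’s code: `schedule_starts` and its parameters are invented names, and a match that misses a slot simply waits for the next minute, standing in for the extended scouting period.

```python
from collections import deque


def schedule_starts(ready_matches, starts_per_minute):
    """Release at most `starts_per_minute` matches per minute.

    Matches that don't get a slot stay queued; in Clash terms, their
    scouting period is extended until a slot frees up.
    Returns {minute: [matches started that minute]}.
    """
    if starts_per_minute <= 0:
        raise ValueError("starts_per_minute must be positive")
    queue = deque(ready_matches)
    schedule = {}
    minute = 0
    while queue:
        batch_size = min(starts_per_minute, len(queue))
        schedule[minute] = [queue.popleft() for _ in range(batch_size)]
        minute += 1
    return schedule


# Five matches ready at once, with a safe rate of two starts per minute:
print(schedule_starts(["m1", "m2", "m3", "m4", "m5"], 2))
# {0: ['m1', 'm2'], 1: ['m3', 'm4'], 2: ['m5']}
```

A limit like this caps the load on Champ Select, but as the May 2018 beta showed, it does nothing to cap how many games end up running on the game servers at the same time.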

We moved from one bottleneck to the next. We considered limiting the number of players who could play Clash, which would’ve been an easy fix mid-tournament, but we abandoned that pretty quickly. So many players reserved time to play Clash and had prepared for it - it wasn’t right to settle for a solution that meant some players would miss out.

During this May 2018 beta, due to all the overload issues we were seeing in Asia, we had to come to terms with the fact that there would be no quick fixes, and that we would have to redesign all of Clash from the ground up. We had to cancel Clash in Europe and the Americas due to game server overload problems.

We were absolutely devastated.

After days of digging into the events of that Clash weekend, we discovered the primary source of the crashes - all the game starts happening at the same time would overload systems like Champ Select. We had done limited load testing, but to create a load test that would fully capture the enthusiasm of our player base would require building out a replica of our largest shard - which was very expensive - and then taking another 3 months to develop the test properly. And even then we knew we’d be relying on assumptions about player behavior that weren’t necessarily accurate. After working evenings and weekends, pushing through a ton of fixes and improvements, we had to accept it.

Clash in its current design would never be successful.

Seeing the enthusiasm and excitement about Clash when it worked - and the frustration, anger, and crushing disappointment when it didn’t - kept us working day and night for a solution. We didn’t want to give up on Clash, even if it meant redoing core League systems. Giving up wasn’t an option.

The Clash Solution

After a short break where we fully reset our thinking, we were ready to tackle the problems with fresh minds full of ideas.

We already knew load testing would be too expensive from both a time and cost perspective. It would require us to run a load test before each tournament to account for all changes to systems and player numbers. We needed something that could be calculated automatically and self-correct with any changes.

Initial Steps

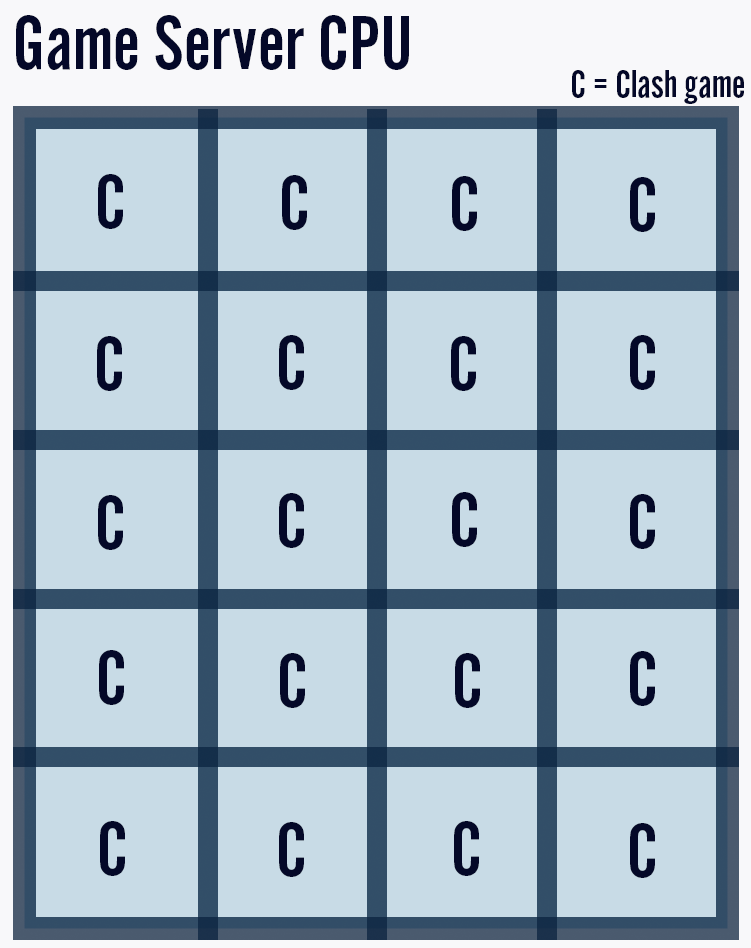

Ultimately, there were 2 major resources we needed to protect - game starts and game servers. We decided to start by controlling how many game starts happened simultaneously, and by tracking that number to make sure it stayed within a safe range. To do this, we’d have to figure out the maximum number of safe game starts, taking into account the lifecycle of a Clash match (and its CPU requirements) from the perspective of the game server.

Mitigating Game Starts

First, we built out a system that gave us more control over the number of simultaneous game starts by splitting player populations into smaller groups. We spread game starts over a longer period of time by having tiers lock in at 30-minute intervals, and we started putting players into brackets as soon as we found teams with similar ratings, which spread out possible simultaneous game starts even further. To make this work, we had to account for the delay between a game starting and its CPU usage showing up, because that delay affects when our game data reflects a change.
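The two ways of spreading out game starts described above, staggered tier lock-ins and early bracketing, can be sketched as follows. This is an illustration under assumptions: the function names, the tier list, and the 8-team bracket size are hypothetical, and grouping teams by a sorted rating is a stand-in for whatever matching Clash actually uses.

```python
from datetime import datetime, timedelta

LOCK_IN_INTERVAL = timedelta(minutes=30)  # tiers lock in 30 minutes apart
BRACKET_SIZE = 8  # assumption: teams per bracket


def tier_lock_in_times(first_lock_in, tiers):
    """Give each tier its own lock-in time, spaced LOCK_IN_INTERVAL apart."""
    return {tier: first_lock_in + i * LOCK_IN_INTERVAL for i, tier in enumerate(tiers)}


def form_brackets(teams, bracket_size=BRACKET_SIZE):
    """Group teams with similar ratings into full brackets.

    `teams` is a list of (name, rating). Teams that don't fill a bracket are
    returned as leftovers, to wait for more teams with similar ratings.
    """
    ranked = sorted(teams, key=lambda team: team[1], reverse=True)
    full = len(ranked) // bracket_size * bracket_size
    brackets = [ranked[i:i + bracket_size] for i in range(0, full, bracket_size)]
    return brackets, ranked[full:]


# Hypothetical tournament: four tiers, the first locking in at 17:00.
for tier, lock_in in tier_lock_in_times(datetime(2020, 3, 15, 17, 0), [4, 3, 2, 1]).items():
    print(tier, lock_in.strftime("%H:%M"))
```

Because a full bracket can begin as soon as it forms, and each tier locks in at a different time, game starts arrive in several smaller waves instead of one large one.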

Considering the Delay

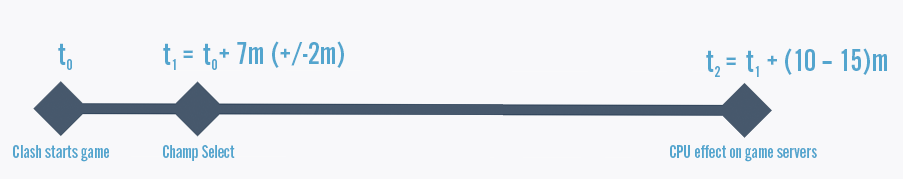

When Clash submits a game to start, it takes several seconds to initiate Champ Select, and Champ Select itself lasts around 7 minutes on average. The game then moves to the game servers, and after approximately 10-15 minutes of early game (which doesn’t take much CPU), we start to see teamfights, which take the most CPU. We have to plan for this CPU peak, which only arrives around 25 minutes into a game, so any change made to the Clash system to protect the infrastructure from overload takes significant time to propagate and take full effect. Any solution we came up with had to account for this delay to avoid stressing downstream components beyond their capacity.
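The delay is easier to see in a toy model. The sketch below assumes a made-up per-game CPU profile: the 7-minute Champ Select and the 15-minute early game come from the text (using the top of the 10-15 minute range), while the 30-minute game length and the CPU values are placeholders. `game_cpu` and `projected_cpu` are hypothetical names.

```python
CHAMP_SELECT_MIN = 7   # average Champ Select length, from the text
EARLY_GAME_MIN = 15    # upper end of the 10-15 minute early game, from the text
GAME_LENGTH_MIN = 30   # assumption: in-game length of a Clash match


def game_cpu(minutes_since_submit):
    """Hypothetical CPU cost (% of one server) of one game at a given age."""
    if minutes_since_submit < CHAMP_SELECT_MIN:
        return 0.0  # still in Champ Select: nothing on the game server yet
    in_game = minutes_since_submit - CHAMP_SELECT_MIN
    if in_game >= GAME_LENGTH_MIN:
        return 0.0  # game has ended
    if in_game < EARLY_GAME_MIN:
        return 1.0  # early game: light load (placeholder value)
    return 3.0      # teamfights: heaviest load (placeholder value)


def projected_cpu(starts_per_minute, horizon):
    """Total CPU at each minute, given the number of games submitted each minute."""
    load = [0.0] * horizon
    for submit_minute, count in enumerate(starts_per_minute):
        for t in range(submit_minute, horizon):
            load[t] += count * game_cpu(t - submit_minute)
    return load


# A burst of 10 games submitted at minute 0:
load = projected_cpu([10], horizon=40)
print(load[5], load[10], load[25], load[37])  # 0.0 10.0 30.0 0.0
```

In this model, games submitted at minute 0 put no load on the game servers for 7 minutes and don’t reach their heaviest load until minute 22, so a throttle that reacts only to current CPU would respond far too late.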

Calculating Limits for Game Starts

There are several backend systems that game starts travel through, each with its own limits, and Champ Select is the most restrictive. Every League game mode goes through this same game start process, so we could use our usual traffic numbers across all League games to estimate a reasonable and safe maximum number of game starts.

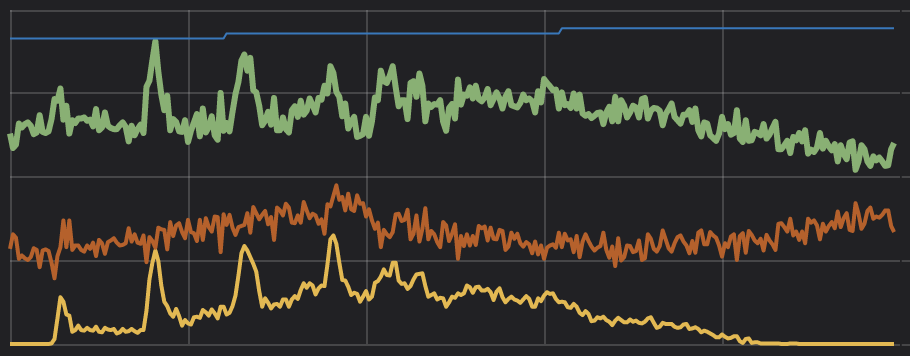

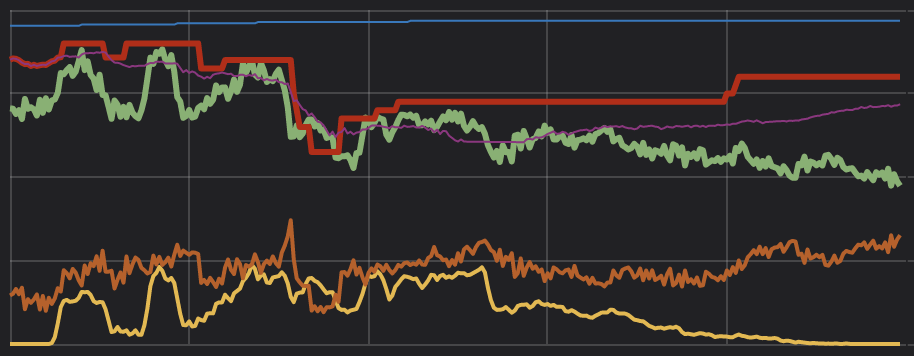

To determine safe limits, we tracked how many games started each minute of every day and calculated the 99th percentile of those per-minute counts. We used this value as a starting point for a safe game start rate, but capping Clash at our normal peak would have meant starting games no faster than that peak, which was too slow. So we pushed the limit higher and higher until we settled on 1.5x the 99th percentile value. This rate could be sustained safely while keeping game starts fast enough that players wouldn’t have to wait too long.
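The percentile-based limit can be sketched in a few lines. The per-minute counts are assumed to be a plain list of numbers, and the nearest-rank percentile used here is one of several reasonable percentile definitions; the post doesn’t say which one was used. Both function names are hypothetical.

```python
import math


def percentile_nearest_rank(values, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


def clash_start_limit(starts_per_minute, multiplier=1.5):
    """Per-minute Clash start limit: `multiplier` x the p99 of normal per-minute starts."""
    return multiplier * percentile_nearest_rank(starts_per_minute, 99)
```

For example, `clash_start_limit(list(range(1, 101)))` returns 148.5: the 99th percentile of those 100 samples is 99, and 1.5 × 99 = 148.5.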

We also added min/max clamps to make sure that Clash game start limits wouldn’t drop too low in small shards or rise too high in large shards. For the most part, this approach has worked well. For example, during the COVID-19 outbreak, we’ve seen a significantly increased player population and have added several servers, and the limits have self-adjusted to both very well.

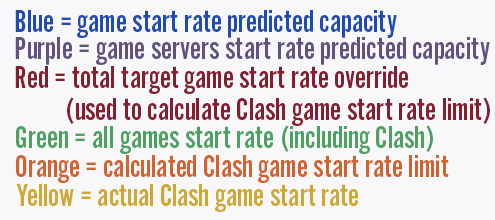

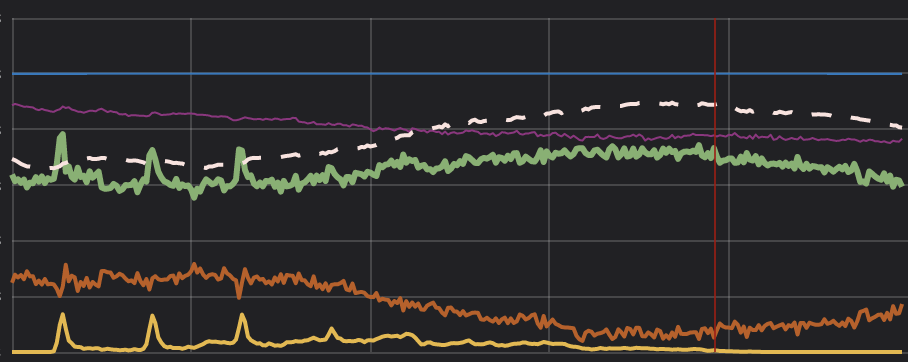

Example: LA on 3/15/20. Peaks are tier start times.
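The min/max clamps described above amount to bounding each shard’s computed limit. The 20 and 500 starts-per-minute bounds and the shard values below are hypothetical, chosen only for illustration.

```python
def clamp_start_limit(limit, floor, ceiling):
    """Keep a shard's computed start limit within [floor, ceiling]."""
    if floor > ceiling:
        raise ValueError("floor must not exceed ceiling")
    return max(floor, min(limit, ceiling))


# Hypothetical computed limits (starts per minute) for three shard sizes:
for shard, computed in {"small": 5.0, "medium": 148.5, "large": 900.0}.items():
    print(shard, clamp_start_limit(computed, floor=20, ceiling=500))
```

Because the clamp is applied to a limit recomputed from current traffic, a shard that grows gets a higher limit automatically, and the bounds only matter at the extremes.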

Calculating Game Server CPU

The primary limiting factor for game servers is CPU - when CPU approaches 85%, players will start to see latency, missed requests, and other unfortunate issues that cause tilt and frustration. To ensure a high quality game experience, we need to keep game server CPU load at a safe level.

To do this, we started with a simple approach of dividing the target CPU% by the average CPU% that a game uses. We applied this number to all game servers based on hardware, and summed them up to obtain the total number of Clash games we can run on all servers. We divided that by average game length, and voilà - we had our initial safe game start rate per minute.

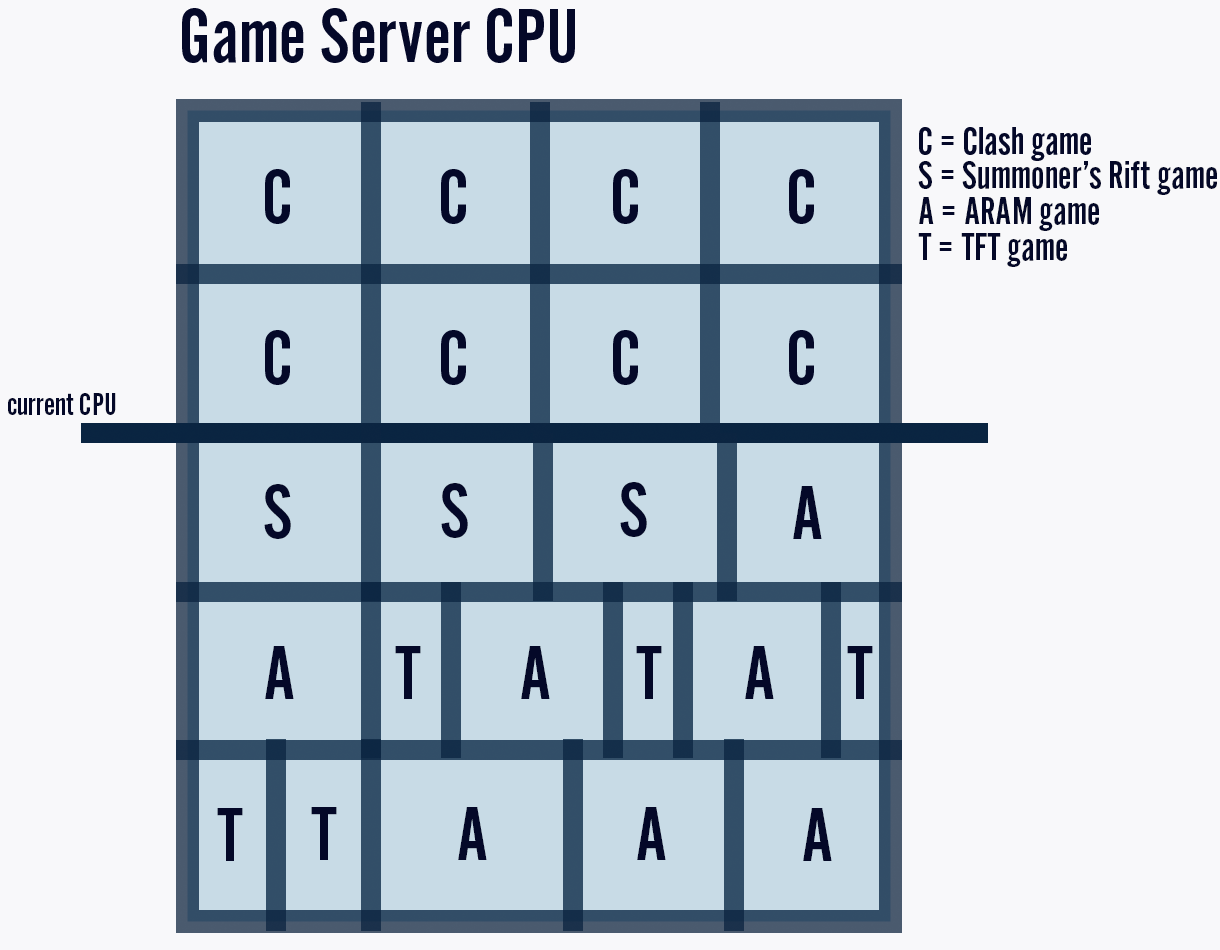
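The initial, uniform-cost calculation can be written out directly. In this sketch, `HardwareClass` and `naive_start_rate` are hypothetical names, the per-game CPU figures are placeholders, each server is assumed to hold only whole games, and the 70% target is the one the post uses later for its automated calculation.

```python
from dataclasses import dataclass


@dataclass
class HardwareClass:
    """A hypothetical group of identical game servers."""
    server_count: int
    avg_cpu_per_game: float  # % of one server's CPU used by an average game


def naive_start_rate(hardware, target_cpu, avg_game_minutes):
    """Initial model: every game costs the same CPU, whatever its mode."""
    total_games = 0
    for hw in hardware:
        # Whole games that fit under the target on one server of this type.
        games_per_server = int(target_cpu // hw.avg_cpu_per_game)
        total_games += games_per_server * hw.server_count
    # Dividing by average game length turns a game count into a per-minute start rate.
    return total_games / avg_game_minutes


fleet = [HardwareClass(100, 2.5), HardwareClass(50, 3.5)]
print(naive_start_rate(fleet, target_cpu=70.0, avg_game_minutes=30.0))
```

With these numbers, the fleet holds 28 × 100 + 20 × 50 = 3,800 games, or about 126.7 starts per minute for 30-minute games. Every game is charged the same CPU regardless of mode, which is the weakness described next.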

But capacity calculated this way underestimated the true capacity, because ARAM and TFT games were counted as if they required the same amount of CPU as games on Summoner’s Rift (where Clash is played), even though they take significantly less. In other words, we could actually handle more games safely than this calculation indicated. This hidden 10-20% of headroom meant the Clash game start rate at peak was much lower than we could actually handle, so players had to wait much longer than necessary for their turn. By manually overriding the calculated values, we were able to squeeze out all the available capacity.

This is how we were initially calculating our capacity. As you can see from this graphic, each game takes up an equal amount of CPU with this model. Later, we’ll look at a comparison with a more realistic view of CPU usage.

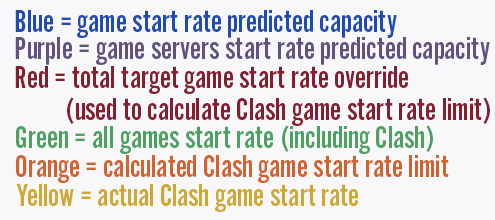

Adjusting the Solution with Manual Overrides

We created a manual override to help us tweak the numbers to make sure we were collecting data effectively. By this point, we had our game start and CPU limits in place. With the manual override, if these limits failed, we could use the data we had to manually set limits, which would allow the tournament to continue (though it would require a lot of monitoring and adjustments).

Initially, we used this feature quite a bit. We had included different game modes in our limit calculations, but because we ran those calculations at individual points in time instead of continuously, our estimates kept falling short. A point-in-time check captured games at different stages of the match lifecycle, and those stages required different amounts of CPU in different game modes. This led us to create an automated data solution that constantly collects data on game modes and CPU usage, so it can capture our game server capacity more accurately and adjust game start limits dynamically.

Example above: EUW on 3/15/20. Flat red line is manual override.

Example: NA on 3/14/20. Red line is the automatically calculated limit.
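The fallback behavior of the manual override can be sketched as a small selection function. The name `effective_start_limit` is hypothetical, and holding starts at zero when neither a calculated limit nor an override is available is an assumed fail-safe, not something the post describes.

```python
from typing import Optional


def effective_start_limit(calculated: Optional[float],
                          manual_override: Optional[float] = None) -> float:
    """Pick the start limit the tournament actually uses.

    An operator-set override always wins. If there is no override and the
    automatic calculation failed (None), hold starts at zero; this fail-safe
    is an assumption for illustration.
    """
    if manual_override is not None:
        return manual_override
    if calculated is None:
        return 0.0
    return calculated
```

Keeping the override as a separate input, rather than overwriting the calculated value, lets operators compare the two (like the flat and automatic red lines in the examples) and drop the override once the calculation is trusted again.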

Automating Data Collection

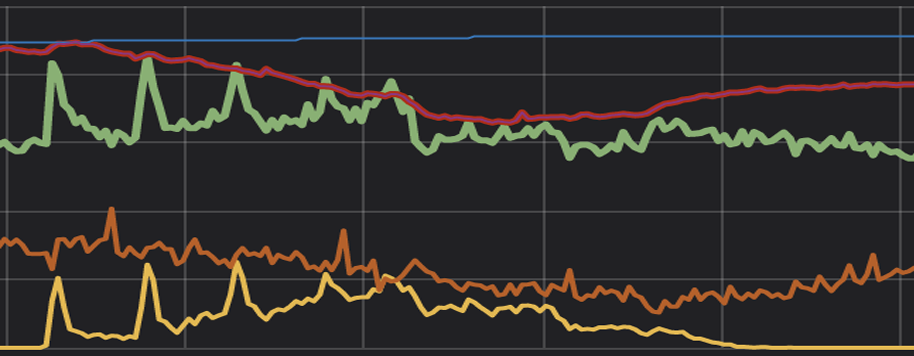

To improve our estimates, we started collecting data in real time, which let us predict capacity dynamically.

The first calculation looked at how many games were running on average, treating every game as a Summoner’s Rift match. We calculated how many of these matches could run concurrently without exceeding our target of 70% CPU usage, and from that derived the number of matches that could safely start per second.

Then we took into account that many of the games in that first calculation were actually other game modes, like TFT or ARAM, which take different amounts of CPU. Our automated data collection queries every game server once a minute for how many games of each type are running and for its total CPU usage. By subtracting that usage from our 70% CPU target, we can calculate how much CPU remains and stagger how many more Clash games can start.
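The difference between the two calculations shows up clearly on a single server. This sketch assumes placeholder CPU costs per game mode and a hypothetical `ServerReport` shape for the once-a-minute query; only the 70% target comes from the text.

```python
from dataclasses import dataclass, field

# Placeholder per-game CPU costs (% of one server); real values would be measured.
CPU_COST = {"SR": 2.5, "ARAM": 1.5, "TFT": 0.5}
TARGET_CPU = 70.0  # target from the text


@dataclass
class ServerReport:
    """What one game server reports each minute (hypothetical shape)."""
    games_by_mode: dict = field(default_factory=dict)  # e.g. {"SR": 10, "TFT": 4}
    total_cpu: float = 0.0                             # measured CPU %, 0-100


def sr_only_headroom(reports):
    """First calculation: count every running game as a Summoner's Rift game."""
    per_server_capacity = int(TARGET_CPU // CPU_COST["SR"])
    return sum(max(per_server_capacity - sum(r.games_by_mode.values()), 0)
               for r in reports)


def measured_headroom(reports):
    """Second calculation: subtract measured CPU from the target, then count how
    many more Clash (Summoner's Rift) games fit in what remains."""
    return sum(int(max(TARGET_CPU - r.total_cpu, 0.0) // CPU_COST["SR"])
               for r in reports)


report = ServerReport({"SR": 10, "ARAM": 6, "TFT": 10}, total_cpu=39.0)
print(sr_only_headroom([report]), measured_headroom([report]))  # 2 12
```

Counting all 26 running games as Summoner’s Rift games leaves room for only 2 more Clash games, while the measured 39% CPU leaves 31 percentage points of headroom, enough for 12. The gap is the same kind of underestimate that ARAM and TFT games caused in the initial model.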

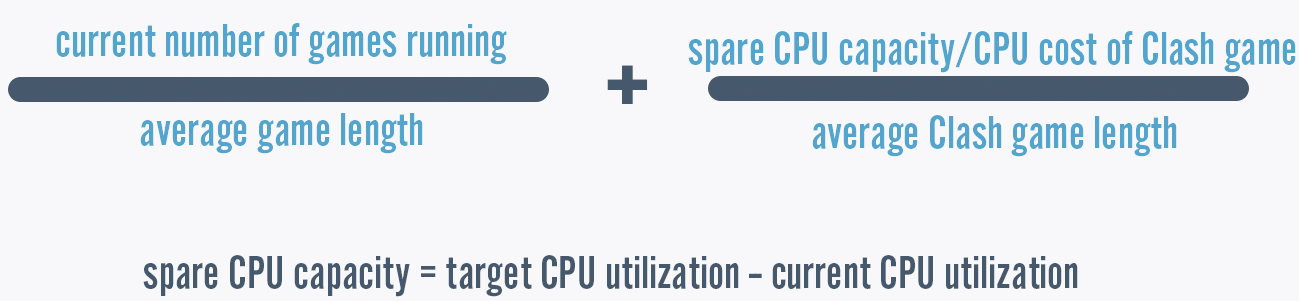

The formula was ultimately simple: the safe Clash start rate is (number of games currently running / average game length) + (spare CPU capacity / CPU cost of a Clash game / average Clash game length). Empirical results showed us that this metric has higher errors at low CPU load, but becomes very precise at high CPU load - which is the critical time to get things right. In other words, the closer we get to the danger zone, the more exact our numbers are.
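Read literally, the formula adds two rates: running games divided by average game length, which approximates how many games finish (and free capacity) each minute, plus the number of extra Clash games that fit in the spare CPU, spread over one Clash game length. The sketch below is that reading, with hypothetical parameter names; it works on fleet-wide totals, and `spare_cpu` is assumed to be in the same units as `clash_cpu_cost`.

```python
def clash_start_rate(running_games, avg_game_minutes,
                     spare_cpu, clash_cpu_cost, avg_clash_game_minutes):
    """Safe Clash starts per minute, following the prose formula.

    running_games / avg_game_minutes approximates how many running games end
    (and free capacity) each minute; spare_cpu / clash_cpu_cost is how many
    extra Clash games fit right now, spread over one Clash game length.
    """
    replacement_rate = running_games / avg_game_minutes
    spare_games = spare_cpu / clash_cpu_cost
    return replacement_rate + spare_games / avg_clash_game_minutes


# 3000 games running (30-minute average), 300 CPU-% spare fleet-wide,
# 2.5% per Clash game, 40-minute Clash games: 100 + 120 / 40
print(clash_start_rate(3000, 30.0, 300.0, 2.5, 40.0))  # 103.0
```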

After our automated data collection system was put into effect, our model looked a little more like this.

Example: KR on 3/15/20.

Clash Launches Successfully!

We finally made it! Now we have a dynamic way to throttle game starts for Clash that has proven to be extremely effective. I still get butterflies in my stomach every time we open the tournament gates - I find myself checking logs and metrics to make sure everything runs smoothly. I have an app on my phone that notifies me when things go wrong, and recently it’s been staying quiet.

Reflecting over the past 3 years, the thing I remember most - more than the frustration or the technical challenges, or even the final celebrations of a successful launch - is the number of Rioters who put their hearts and souls (and time!) into resolving issues to bring the Clash experience to players.

What kept us going through late nights and error messages?

The players. Of course! Their delight with Clash when it worked and their comments expressing both excitement and frustration throughout development fueled us. We were committed to bringing the Clash experience to all players, and the overwhelming response from the player base gave us the courage to keep fighting until we got it right.

Thanks for reading! If you have any questions or comments, please post them below.