Thinking Inside the Container

Containers have taken over the world, and I, for one, welcome our new containerized overlords.

They do, however, present interesting challenges for me and my fellow Rioters on the Pipeline Engineering team. My name is Maxfield Stewart, and I'm an engineer here at Riot. My team focuses on build pipelines: everything from code check-in to the artifacts we deploy, and sometimes beyond. If continuous delivery were a theme song, we'd be singing it a cappella. We manage one of Riot's largest internal server farms and virtual machine clusters because we operate a cloud-like environment. One beast is called the "Build Farm": what started life years ago as a small cluster of boxes building League of Legends game clients is now a monster conglomeration of physical and virtual machines.

Lately, we've been adding Docker containers to that mix at a furious pace. How can we integrate containers into a classic build farm stack that with each passing day looks more and more like a self-service cloud-based work engine? Can we somehow use Dockerfiles to define build environments and integrate those with much of our common open source infrastructure? Can we possibly break away from a traditional Virtual Machine cloud and embrace a containerized future?

The list of questions goes on for quite a while—kind of like an Ashe arrow. I'm introducing this series of blog posts where I'll walk through how my team is attempting to answer them. In this first post I provide some background context as to where we came from and why we want to do this. In subsequent posts I’ll share some concrete ways we’re getting our hands dirty with Jenkins and Docker. The first of these tutorials, a basic introduction, can be found here. If you're interested in learning about ways to use containers to create build farms, inspire continuous delivery, and enable engineers to deliver faster, then this series is for you. But be ready: I'm going to take it from basic introductions to deep in the weeds over time. As a result I hope this series will become a great Introduction to Serious Business Docker Yo.

First, let me rewind to a year ago when we brought continuous delivery to League of Legends. Before that, we were desperately trying to ship League to players on a regular cadence but our tools were struggling. So we re-invested in automating everything — from our build pipelines to our test environment creation — and enjoyed massive returns on making the transition, including benefits to our delivery consistency, build times, and overall ability for teams to get work done. League of Legends went from only a few viable builds a day to over 30 builds a day.

A League of Legends build is no joke. It's over 150 jobs, and we build every flavor of League of Legends we care about. Our builds come in all shapes and sizes, from traditional debug builds to various forms of future content, as well as variations for all of our global partners, like Tencent and Garena. We were able to track every single build, where it went, what test environments it was deployed to, and what was running in test, PBE, and production with the click of a few buttons. We could create test environments at the click of a button and went from 20 or so internal test environments to over 70 in a matter of weeks. That's over 450 virtual machines! What's more, League game code is only a fraction of what we build here. The farm itself sits happily at over 3,300 build jobs supporting all kinds of engineering teams at Riot.

Still, the process wasn’t perfect: as with all software, at times older tools required shims and bridges to make everything work together. We grounded our move to continuous delivery in four core principles we still hold today:

- We believe that engineering teams have to be able to totally own their technology stacks, down to administrative level control of their build environments.

- We believe in Configuration as Code. Teams should maintain their build pipeline and environment in source control whenever possible.

- We believe that every time an engineer performs a build, they need to build a shippable version of their software in all deployable configurations. A “build” is not a code compile; it’s the entire set of deployable artifacts.

- We believe that shipping is a product decision. Product teams need to be able to deploy and see the latest shippable version at the push of a button.

To achieve these goals we needed a world-class tech stack that could work within these principles. We saw three options: build our own from scratch, buy someone else's tools for the job, or go with an open source project and add custom solutions where appropriate.

We opted for the third option. For this blog I don’t want to compare and contrast CI tools, but ultimately we felt that focusing on open source tools and modifying them to meet our needs was the best middle ground: we wouldn't have to write everything from scratch, we'd have a large open community to collaborate with, and we'd keep the freedom to deviate where appropriate.

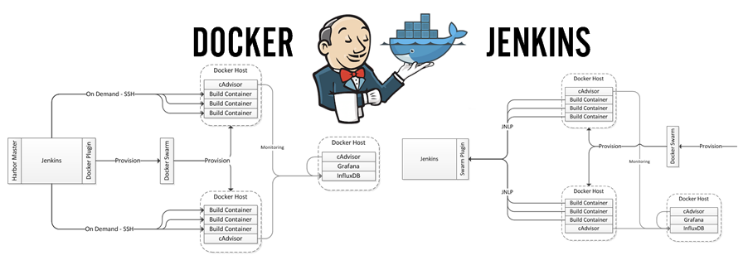

As a result, our tech stack to achieve this was surprisingly simple:

- Jenkins (Open Source Version)

- The Job DSL plugin for Jenkins

- The Build Flow plugin for Jenkins

- Engineering ingenuity to glue our various components together

We chose and continue to use Jenkins for its flexibility, open source nature, and relative ease at handling many of our base case build operations. At a high level that includes the ease of creating a build pipeline that meets our core continuous delivery requirements (mentioned above). Because Jenkins is a widely used open source tool, we also had a vibrant community to be inspired by and collaborate with. Instead of authoring custom tooling from scratch, engineering teams could leverage implementations of open standards. That’s helpful, but also risky. Those standards continually evolve, and the technology can be a moving target: what was a good idea yesterday might become a bad idea tomorrow. However, when combined with the right choice of plugins and some simple engineering know-how, Jenkins let us achieve fully automated continuous delivery chains from a very small set of code and configuration with very little overhead.

So where does Docker play a role? Let’s return to one of the core principles around continuous delivery I mentioned earlier. Recently, one challenge my team tackled was ownership of build environments. Previously, we assessed tools like Packer.io by having individual engineers define images for their virtual machines, and then worked out the security hurdles involved in giving product teams root access to their farms. We essentially designed an internal cloud wrapped by a workflow engine, in this case, Jenkins. We explored common configuration management options, like Puppet and Chef, to complement this virtual cloud solution and enable engineers to control these machines.

And then Docker happened.

One thing became immediately obvious: we could maintain a Dockerfile more easily than the configuration for any other tool we’d tried. Suddenly containers, which we’d been aware of for some time, had been brought within easy reach by Docker. If we could combine our approach to build environment ownership and Docker’s concept of Dockerfiles, we’d be in engineering heaven.

Docker is awesome at solving deployment challenges. While I’m focusing here on how it can help workflow engines, build systems, and pipelines, we’re also rapidly gaining familiarity with it as a deployment tool and methodology. Riot operates a lot of microservices, and containers + microservices is a bit like peanut butter and chocolate. Because Docker is becoming a "Thing (tm)", we’ve started exploring whether it can solve other problems as well.

The Pipeline Engineering team's dream became very obvious: we want a build pipeline generator that can dynamically spin up a continuous delivery pipeline from scratch using framework code for a given technology—essentially, push-button build environments on demand. Where we were automatically provisioning a virtual machine before, we believed we could now use Docker containers to create entire build pipelines.
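To make the idea of a pipeline generator a little more concrete, here is a minimal Python sketch of the concept, not our actual tooling. Everything in it is a hypothetical placeholder: the stack names, the image tags, the commands, and the `generate_pipeline` function itself. It only shows the shape of the idea, where a known technology stack maps to a template and the template expands into an ordered set of build jobs that each run in a container.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical templates: stack names, image tags, and commands are
# placeholders for illustration only.
STACK_TEMPLATES = {
    "java-service": {
        "image": "example/java-build:latest",
        "steps": [
            ("compile", "mvn -B compile"),
            ("test", "mvn -B test"),
            ("package", "mvn -B package"),
        ],
    },
    "static-website": {
        "image": "example/node-build:latest",
        "steps": [
            ("install", "npm ci"),
            ("build", "npm run build"),
        ],
    },
}


@dataclass(frozen=True)
class Stage:
    job_name: str  # name of the generated build job
    image: str     # container image used as the build environment
    command: str   # command run inside that container


def generate_pipeline(repo: str, stack: str) -> List[Stage]:
    """Expand a stack template into an ordered list of stages for one repo."""
    try:
        template = STACK_TEMPLATES[stack]
    except KeyError:
        raise ValueError(f"no pipeline template for stack {stack!r}") from None
    return [
        Stage(f"{repo}-{name}", template["image"], command)
        for name, command in template["steps"]
    ]


if __name__ == "__main__":
    for stage in generate_pipeline("my-service", "java-service"):
        print(stage.job_name, "->", stage.command)
```

In a real system each stage would be turned into a job in the workflow engine rather than printed, but the mapping from "technology stack" to "ready-made pipeline" is the part that matters here.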

To be clear, Docker isn’t a perfect turnkey solution. It’s not (yet) solving our Windows build environment problems, and it may never solve our OS X ones. Not every tool we use right now behaves well in containers or even considers them a target platform. However, thus far Docker appears to take a giant leap forward in solving our challenges on Linux. At Riot, we expend a large amount of engineering effort on our platform and backends. Nearly all of our features contain core backend services delivered globally as microservices that mostly run on Linux. So improving this solution space is well worth our time.

We’ve already begun work on exploring the value Docker adds to our current build stack and garnered several early wins. We’ve created push button development environments for Jenkins by deploying it in containers, speeding up the testing and debugging of everything we do. We’ve started running a small farm (around 500 jobs) using containers as build environments and the teams using them provided positive feedback around ownership and speed of iteration. In fact we've already seen some of the following results:

- Engineers building microservices and websites based on Linux can now programmatically define their build environments.

- Engineers can work locally with their build environment the same way it will work in our build farm (in a future blog I'll even teach you how to do this).

- Dynamic allocation of resources means we can reduce our overall compute footprint and become far more cost-efficient.

- One virtual machine is handling the build work of 4 different teams and 300+ build jobs—that's the work that used to be handled by 8 different virtual machines!
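As a rough illustration of why a containerized build environment behaves the same on a laptop and on the farm, the Python sketch below assembles a `docker run` invocation that mounts a source checkout into a build image and runs a build command there. The helper name, image tag, paths, and build command are assumptions made up for this example; only the `docker run` flags (`--rm`, `-v`, `-w`) are standard Docker CLI options.

```python
import shlex
from typing import List


def docker_build_command(image: str, workspace: str, build_cmd: str,
                         workdir: str = "/workspace") -> List[str]:
    """Return the argv for running build_cmd inside a build container.

    The same argv can be run by an engineer locally or by a build agent,
    so both use the identical environment defined by the image.
    """
    return [
        "docker", "run",
        "--rm",                           # remove the container when the build ends
        "-v", f"{workspace}:{workdir}",   # mount the source checkout into the container
        "-w", workdir,                    # run the build from the mounted directory
        image,
        "sh", "-c", build_cmd,            # let a shell interpret the build command
    ]


if __name__ == "__main__":
    # Hypothetical image and paths, for illustration only.
    argv = docker_build_command(
        image="example/java-build:latest",
        workspace="/home/dev/my-service",
        build_cmd="mvn -B package",
    )
    print(shlex.join(argv))
```

Passing the resulting list to `subprocess.run` would execute the build, provided Docker is installed and the image exists.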

With all that said, this post is just an introduction to a series that dives into these topics. This series will cover several areas as it progresses, with most of the posts in tutorial format with samples and source code provided. It’ll start with a look at deploying Jenkins with Docker, including some lessons learned and a few opinions on best practices. This will give you solid foundations in basic Docker principles by using a real-world application as the example. Next, it’ll explore options for containerized build environments and walk through a few things we do at Riot with Docker that aren’t part of the larger Jenkins ecosystem. Lastly, it’ll follow along with the Pipeline Engineering team as we converge on a final approach to our overall goal and explore tools we’ve created or modified along the way.

At its core, this series is mostly about the journey. As stated earlier, we know what we want: build environments defined as Docker containers, owned and maintained as Dockerfiles by the dev teams that use them. We want the ability to pre-generate build pipelines for known technology stacks so teams can have continuous delivery build pipelines running the first day they create a code repository. We have some ideas on how to achieve that, but our process is iterative and involves discovery, and this series will follow along.

Our goal is to share with the wider world discoveries as well as setbacks. A lot of what I’ll be writing about here isn’t really a big secret, but also isn’t easily discoverable in one place. I hope our series will give back to the community that got us this far and in return elicit comments on our approach. Through dialogue we can learn something!

For more information, check out the rest of this series:

Part I: Thinking Inside the Container (this article)

Part II: Putting Jenkins in a Docker Container

Part III: Docker & Jenkins: Data That Persists

Part IV: Jenkins, Docker, Proxies, and Compose

Part V: Taking Control of Your Docker Image

Part VI: Building with Jenkins Inside an Ephemeral Docker Container

Part VII: Tutorial: Building with Jenkins Inside an Ephemeral Docker Container

Part VIII: DockerCon Talk and the Story So Far